Week 4: Exercises#

Exercises – Long Day#

1: Types of Matrices#

Consider the matrices:

Determine for each matrix whether it is symmetric, Hermitian, and/or normal. You may use SymPy to check if the matrices are normal. For convenience, the matrices are provided here:

A = Matrix.diag(1, 2, 3)

B = Matrix([[1, 2, 3], [3, 1, 2], [2, 3, 1]])

C = Matrix([[1, 2 + I, 3*I], [2 - I, 1, 2], [-3*I, 2, 1]])

D = Matrix([[I, 2, 3], [2, I, 2], [3, 2, I]])

Answer

\(A\) is real and diagonal and is thus automatically also symmetric, Hermitian and normal.

Answer

\(B\) is normal but neither symmetric nor Hermitian.

Answer

\(C\) is Hermitian and thus also normal but not symmetric.

Answer

\(D\) is symmetric and normal but not Hermitian (a Hermitian matrix must have real diagonal elements).
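The classifications above can be double-checked directly from the definitions \(M^T = M\), \(M^* = M\) and \(M M^* = M^* M\). The following is a minimal sketch; the helper name `classify` is hypothetical and not part of SymPy.

```python
import sympy as sp
from sympy import I, Matrix

A = Matrix.diag(1, 2, 3)
B = Matrix([[1, 2, 3], [3, 1, 2], [2, 3, 1]])
C = Matrix([[1, 2 + I, 3*I], [2 - I, 1, 2], [-3*I, 2, 1]])
D = Matrix([[I, 2, 3], [2, I, 2], [3, 2, I]])

def classify(M):
    """Hypothetical helper: return (symmetric, Hermitian, normal) as booleans."""
    symmetric = M.T == M                          # M^T = M
    hermitian = M.H == M                          # M^* = M (conjugate transpose)
    commutator = (M * M.H - M.H * M).expand()     # expand so complex products evaluate
    normal = commutator == sp.zeros(*M.shape)     # M M^* = M^* M
    return symmetric, hermitian, normal

for name, M in [("A", A), ("B", B), ("C", C), ("D", D)]:
    print(name, classify(M))
```

The commutator is expanded before the comparison because SymPy compares matrices structurally, so unexpanded products of complex numbers could otherwise hide an equality.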

2: Hermitian 2-by-2 Matrix. By Hand#

We consider the Hermitian matrix \(A\) given by:

\(A = \begin{bmatrix} 0 & i \\ -i & 0 \end{bmatrix}.\)

This exercise involves computing a spectral decomposition of \(A\), which we know exists due to the Spectral Theorem (the complex case). We find this decomposition of \(A\) in three steps:

Question a#

Find all eigenvalues and associated eigenvectors of \(A\). Check your answer using SymPy’s A.eigenvects().

Answer

\(\lambda_1 = -1\) with eigenvector \(\pmb{v}_1 = [-i,1]^T\).

\(\lambda_2 = 1\) with eigenvector \(\pmb{v}_2 = [i,1]^T\).

Remember that eigenvectors can be multiplied by any nonzero complex number. Therefore, for example, \(\pmb{v}_1 = [1, i]^T\) (which is multiplied by \(i\)) is also an eigenvector belonging to \(\lambda_1 = -1\).
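As a quick sanity check, the eigenpairs stated above can be tested against the defining equation \(A\pmb{v} = \lambda \pmb{v}\). This is a small sketch using the matrix \(A\) as it is entered in SymPy in Question d; the names `v1`, `v2` and `w1` are chosen here for illustration.

```python
from sympy import I, Matrix

A = Matrix([[0, I], [-I, 0]])
v1 = Matrix([-I, 1])   # claimed eigenvector for lambda_1 = -1
v2 = Matrix([I, 1])    # claimed eigenvector for lambda_2 = 1

# An eigenpair (lam, v) must satisfy A v = lam v.
print(A * v1 == -1 * v1)
print(A * v2 == 1 * v2)

# Scaling by a nonzero complex number keeps the eigenvector property.
w1 = I * v1            # the vector [1, i]^T mentioned in the answer
print(w1.T, A * w1 == -1 * w1)
```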

Question b#

Provide an orthonormal basis consisting of eigenvectors of \(A\).

Hint

Since \(A\) is Hermitian we know that eigenvectors belonging to different eigenvalues are orthogonal.

Hint

Hence, the two eigenvectors just need to be normalized.

Question c#

This result holds for general \(n \times n\) matrices. Show that \(A = U \Lambda U^*\) if and only if \(\Lambda = U^* A U\), when \(U\) is unitary.

Hint

Multiply the equation \(A = U \Lambda U^*\) through with \(U^*\) from the left and \(U\) from the right.

Hint

Use the fact that \(U^* U = I\).
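One possible way to write out the two implications suggested by the hints is sketched below. It only uses \(U^* U = U U^* = I\), which holds for a square unitary matrix \(U\).

```latex
\begin{aligned}
A = U \Lambda U^* \;&\Longrightarrow\; U^* A U = U^* (U \Lambda U^*) U = (U^* U)\,\Lambda\,(U^* U) = \Lambda, \\
\Lambda = U^* A U \;&\Longrightarrow\; U \Lambda U^* = U (U^* A U) U^* = (U U^*)\,A\,(U U^*) = A.
\end{aligned}
```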

Question d#

Write down a unitary matrix \(U\) and a diagonal matrix \(\Lambda\) such that \(A = U \Lambda U^*\). This formula is called a spectral decomposition of \(A\). Check your result using the SymPy command:

A = Matrix([[0, I], [-I, 0]])

A.diagonalize(normalize = True)

Hint

First, find \(U\). The columns of \(U\) must form an orthonormal basis consisting of eigenvectors.

Hint

\(\Lambda\) needs to have eigenvalues in its diagonal. Their order must “match” the order of the eigenvectors. If you are in doubt, then calculate \(\Lambda\) via \(\Lambda= U^* A U\).
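The recipe in the hints can be sketched in SymPy as follows, assuming the eigenvectors from Question a are used in the order \(\pmb{v}_1, \pmb{v}_2\). The variable names are chosen for illustration, and other valid choices of \(U\) exist.

```python
import sympy as sp
from sympy import I, Matrix

A = Matrix([[0, I], [-I, 0]])

# Eigenvectors from Question a, normalized to unit length.
v1 = Matrix([-I, 1])   # belongs to eigenvalue -1
v2 = Matrix([I, 1])    # belongs to eigenvalue  1
u1 = v1 / v1.norm()
u2 = v2 / v2.norm()

U = Matrix.hstack(u1, u2)                    # columns: orthonormal eigenvectors
Lam = (U.H * A * U).applyfunc(sp.simplify)   # Lambda = U^* A U

print((U.H * U).applyfunc(sp.simplify) == sp.eye(2))   # is U unitary?
print(Lam)                                             # order matches the columns of U
print((U * Lam * U.H).applyfunc(sp.simplify) == A)     # A = U Lambda U^*
```

Computing \(\Lambda\) as \(U^* A U\) rather than typing it in by hand avoids mismatching the order of eigenvalues and eigenvectors.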

3: Orthogonality of Eigenvectors of Symmetric Matrices#

Let \(C\) be a \(2 \times 2\) real, symmetric matrix with two different eigenvalues. Show that the eigenvectors \(\pmb{v}_1\) and \(\pmb{v}_2\) corresponding to the two different eigenvalues are orthogonal, i.e., that

\(\langle \pmb{v}_1, \pmb{v}_2 \rangle = 0.\)

Hint

Since \(C\) is symmetric, we have

\(\langle C \pmb{v}_1, \pmb{v}_2 \rangle = \langle \pmb{v}_1, C \pmb{v}_2 \rangle.\)

Now use the fact that \(\pmb{v}_1, \pmb{v}_2\) are eigenvectors.

Hint

Since the eigenvalues are real, we get

\(\lambda_1 \langle \pmb{v}_1, \pmb{v}_2 \rangle = \lambda_2 \langle \pmb{v}_1, \pmb{v}_2 \rangle.\)

Move all terms to the left side of the equal sign and factorize.

Hint

Now use the rule of zero product on \(\lambda_1 - \lambda_2 \neq 0\) to conclude that

\(\langle \pmb{v}_1, \pmb{v}_2 \rangle = 0.\)
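The statement can be illustrated numerically before proving it. The sketch below uses a hypothetical example matrix that is not part of the exercise; it does not replace the general argument in the hints.

```python
import sympy as sp

# Hypothetical example matrix (not from the exercise): real, symmetric,
# with two different eigenvalues.
C = sp.Matrix([[2, 1], [1, 2]])

# eigenvects() returns triples (eigenvalue, multiplicity, [basis vectors]).
(lam1, _, (v1,)), (lam2, _, (v2,)) = C.eigenvects()

print(lam1, lam2)    # the two different eigenvalues
print(v1.dot(v2))    # real inner product <v1, v2>
```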

4: Symmetric 3-by-3 matrix#

We are given the real and symmetric matrix

Find a spectral decomposition \(A = Q \Lambda Q^T\) of \(A\). In other words, provide a real orthogonal matrix \(Q\) and a diagonal matrix \(\Lambda\) such that

or, equivalently,

is fulfilled. As in the previous exercise we know from the Spectral Theorem (the real case) that these matrices exist.

Hint

Find a matrix \( V\), whose columns are eigenvectors of \(A\).

Hint

An obvious choice is

which fulfills \( V^{-1}\, A\, V=\Lambda\) with the associated diagonal matrix

But \(V\) is not orthogonal…

Hint

\(V\) can be orthogonalized by the use of the Gram-Schmidt procedure on its columns.

Hint

Since \(A\) is symmetric, the corresponding eigenspaces are orthogonal. Therefore, in this exercise only the two-dimensional eigenspace needs to undergo the Gram-Schmidt procedure. The one-dimensional eigenspace only needs to be normalized.

Answer

This is one of several possible choices. With this choice, the diagonal matrix becomes \(\Lambda = \operatorname{diag}(-4, -1, -1)\). If you are unsure about the order of the eigenvalues in \(\Lambda\), you can compute \(\Lambda = Q^T A Q\).

5: Spectral decomposition with SymPy#

We consider the following matrices given in SymPy:

A = Matrix([[1, -1, 0, 0], [0, 1, -1, 0], [0, 0, 1, -1], [-1, 0, 0, 1]])

B = Matrix([[1, 2, 3, 4], [4, 1, 2, 3], [3, 4, 1, 2], [2, 3, 4, 1]])

A, B

We are informed that both matrices are real, normal matrices. This can be checked by:

A.conjugate() == A, B.conjugate() == B, A*A.T == A.T*A, B*B.T == B.T*B

(True, True, True, True)

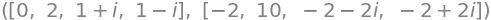

We are furthermore informed that the eigenvalues are, respectively:

A.eigenvals(multiple=True), B.eigenvals(multiple=True)

Question a#

Will the following SymPy commands give us the matrices involved in the spectral decompositions of \(A\) and \(B\)? The call A.diagonalize(normalize=True) returns \((V, \Lambda)\), where \(A = V \Lambda V^{-1}\), with normalized eigenvectors in \(V\) and the eigenvalues of \(A\) being the diagonal elements in the diagonal matrix \(\Lambda\) (according to the eigenvalue problem studied in Mathematics 1a).

A.diagonalize(normalize = True), B.diagonalize(normalize = True)
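Normalizing the columns does not by itself make them mutually orthogonal, so it is worth testing whether the returned eigenvector matrices are unitary. The following is a minimal sketch; the helper `is_unitary` is hypothetical and not a SymPy function.

```python
import sympy as sp
from sympy import Matrix

A = Matrix([[1, -1, 0, 0], [0, 1, -1, 0], [0, 0, 1, -1], [-1, 0, 0, 1]])
B = Matrix([[1, 2, 3, 4], [4, 1, 2, 3], [3, 4, 1, 2], [2, 3, 4, 1]])

def is_unitary(V):
    """Hypothetical helper: check V^* V = I after simplification."""
    return (V.H * V).applyfunc(sp.simplify) == sp.eye(V.rows)

VA, LA = A.diagonalize(normalize=True)   # A = VA * LA * VA^{-1}
VB, LB = B.diagonalize(normalize=True)   # B = VB * LB * VB^{-1}

print(is_unitary(VA), is_unitary(VB))
```

If the check succeeds, \(V^{-1} = V^*\) and the output of diagonalize is already a spectral decomposition.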

Question b#

Does a unitary matrix that diagonalizes both \(A\) and \(B\) exist? Meaning, does one unitary matrix exist such that \(A = U \Lambda_1 U^*\) and \(B = U \Lambda_2 U^*\), where \(\Lambda_1\) is a diagonal matrix consisting of the eigenvalues of \(A\) and where \(\Lambda_2\) is a diagonal matrix consisting of the eigenvalues of \(B\)?

Hint

How are the two unitary matrices from the previous question related?

Answer

Both unitary matrices from the previous question work.

Question c#

You have seen the matrix \(U\), or maybe \(U^*\), before (possibly with its columns in a different order). What kind of matrix is this?

Answer

\(U\) is the so-called Fourier matrix; see this example in the textbook. In the exercise 4: Unitary Matrices, we specifically considered the \(4 \times 4\) Fourier matrix \(F_4\). The matrices \(A\) and \(B\) are so-called circulant matrices, which serve as mapping matrices for (periodic) convolution, used in, for example, convolutional neural networks (CNNs). All such matrices have the Fourier matrix as their eigenvector matrix \(U\).
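The claim about the Fourier matrix can be checked directly. The sketch below assumes the convention \(F_{jk} = \omega^{jk}/\sqrt{n}\) with \(\omega = e^{-2\pi i/n}\), which gives \(\omega = -i\) for \(n = 4\); the textbook may use the opposite sign, which only reorders the columns.

```python
import sympy as sp
from sympy import I, Matrix

A = Matrix([[1, -1, 0, 0], [0, 1, -1, 0], [0, 0, 1, -1], [-1, 0, 0, 1]])
B = Matrix([[1, 2, 3, 4], [4, 1, 2, 3], [3, 4, 1, 2], [2, 3, 4, 1]])

n = 4
omega = -I   # assumed convention: omega = exp(-2*pi*i/n) for n = 4
F = Matrix(n, n, lambda j, k: omega**(j * k)) / sp.sqrt(n)

DA = (F.H * A * F).applyfunc(sp.simplify)
DB = (F.H * B * F).applyfunc(sp.simplify)

print((F.H * F).applyfunc(sp.simplify) == sp.eye(n))   # F is unitary
print(DA.is_diagonal(), DB.is_diagonal())              # F diagonalizes both matrices
print(DA.diagonal(), DB.diagonal())                    # eigenvalues of A and of B
```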

6: Diagonalization and Reduction of Quadratic Forms#

We consider the function \(q : \mathbb{R}^3 \to \mathbb{R}\) given by

\(q(x,y,z)=-2x^2-2y^2-2z^2+2xy+2xz-2yz+2x+y+z+5.\)

Note that \(q\) can be split into two parts: a part containing purely the quadratic terms: \(k(x,y,z)=-2x^2-2y^2-2z^2+2xy+2xz-2yz\), and a part with the remaining terms, which is a linear polynomial: \(2x+y+z+5\).

We are given the symmetric matrix

\(A=\begin{bmatrix} -2 & 1 & 1 \\ 1 & -2 & -1 \\ 1 & -1 & -2 \end{bmatrix}.\)
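The symmetric matrix of a quadratic form can be read off from the coefficients: squared terms go on the diagonal and each mixed coefficient is split evenly between the two symmetric positions. Equivalently, it is half the Hessian matrix, which the following sketch uses to recover the matrix of \(k\) (the variable names are chosen here for illustration).

```python
import sympy as sp

x, y, z = sp.symbols("x y z", real=True)
k = -2*x**2 - 2*y**2 - 2*z**2 + 2*x*y + 2*x*z - 2*y*z

# For a quadratic form k(v) = v^T A v with symmetric A, the Hessian of k is 2A.
A = sp.hessian(k, (x, y, z)) / 2

v = sp.Matrix([x, y, z])
print(A)
print(sp.expand((v.T * A * v)[0, 0] - k) == 0)   # v^T A v reproduces k
```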

Question a#

Provide a real, orthogonal matrix \(Q\) and a diagonal matrix \(\Lambda\), such that

You must choose \(Q\) such that it has \(\operatorname{det}\,Q=1\). You may use SymPy for this exercise.

Note

Real, orthogonal matrices always have \(\operatorname{det} Q = \pm 1\) (why do you think that is?). If your chosen \(Q\) has \(\operatorname{det} Q = -1\), you can simply change the sign of any one column; the columns then still form an orthonormal basis of eigenvectors. Real orthogonal matrices with \(\operatorname{det} Q = 1\) are sometimes called properly oriented. In \(\mathbb{R}^3\), this simply means that the orthonormal basis which constitutes the columns in \(Q\) forms a right-handed coordinate system. This does not play a significant role for us in this problem.

Hint

With SymPy, you can see that \(A\) has \(-4\) as a simple eigenvalue while it has \(-1\) as a double eigenvalue. Therefore, some work is required to find an orthonormal basis for \(\mathbb{R}^3\) consisting of eigenvectors of \(A\).

Hint

You can use SymPy’s GramSchmidt command on the eigenvectors from A.eigenvects(). But maybe you can solve this more quickly using educated “guesses”: Choose an eigenvector from the eigenspace \(E_{-4}\) and one from \(E_{-1}\). Guess a third vector that is orthogonal to both. Normalize them all, and you now have three usable, orthonormal vectors to construct your \(Q\) from.
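The GramSchmidt route from the hint could look roughly as follows. This is a sketch under the assumption that \(A\) is the symmetric matrix of the quadratic part \(k\); the resulting \(Q\) depends on the eigenvectors SymPy returns and need not coincide with other valid choices.

```python
import sympy as sp
from sympy import GramSchmidt, Matrix

A = Matrix([[-2, 1, 1], [1, -2, -1], [1, -1, -2]])   # matrix of the quadratic part k

# Collect a basis of eigenvectors, eigenspace by eigenspace.
vectors = [v for _, _, basis in A.eigenvects() for v in basis]

# Orthonormalize. Eigenspaces of a symmetric matrix are mutually orthogonal,
# so every resulting vector is still an eigenvector.
ortho = GramSchmidt(vectors, orthonormal=True)
Q = Matrix.hstack(*ortho)

# Flip one column if needed so that det Q = 1.
if sp.simplify(Q.det()) == -1:
    Q[:, 0] = -Q[:, 0]

Lam = (Q.T * A * Q).applyfunc(sp.simplify)   # diagonal, order matches the columns of Q
print(Q)
print(Lam)
```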

Answer

There are several fitting choices, and we have here chosen:

\(Q=\begin{bmatrix} -\frac{\sqrt 3}{3} & \frac{\sqrt 2}{2} & \frac{\sqrt 6}{6} \\ \frac{\sqrt 3}{3} & 0 & \frac{\sqrt 6}{3} \\ \frac{\sqrt 3}{3} & \frac{\sqrt 2}{2} & -\frac{\sqrt 6}{6} \end{bmatrix}\) and \(\Lambda=\begin{bmatrix} -4 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & -1 \end{bmatrix}.\)

Question b#

State the functional expression of \(k(x,y,z),\) convert it to matrix form, and reduce it.

Hint

To reduce means: Find a (properly oriented) orthonormal basis for \(\mathbb{R}^3\) with respect to which the expression for \(k\) contains no mixed terms.

Hint

You have probably noticed that the matrix involved in the matrix form of \(k(x, y, z)\) is identical to \(A\), so you can simply use the basis with its corresponding diagonalization that we found above.

Answer

If we choose the columns in the found \(Q\) as a new orthonormal basis \(\beta\), we obtain the following reduction of \(k:\)

Question c#

Find a properly oriented orthonormal basis for \(\mathbb{R}^3\) in which the formula for \(q\) has no mixed terms. Determine the functional expression.

Hint

We will use the orthonormal basis \(\beta\) found above. Thus the quadratic form is taken care of. Now the question is simply how the first-degree polynomial involved in \(q\) looks with respect to that new basis.

Hint

Use \(Q = {}_e[id]_\beta\) found above as the change-of-basis matrix, where \(e\) denotes the standard basis. Note that \(Q^T = {}_{\beta}[id]_e\). The notation \({}_{\beta}[id]_e\) is used for the change-of-basis matrix that switches from the \(e\) basis to the \(\beta\) basis; we will also write this as \({}_{\beta}M_e\).

Answer

If we choose the columns in the found \(Q\) as our new orthonormal basis \(\beta\), then \(q\) gets the following form:
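The change of coordinates can be carried out mechanically by substituting \([x, y, z]^T = Q\,[u, v, w]^T\) into \(q\). In the sketch below, \(u, v, w\) are hypothetical names for the coordinates with respect to \(\beta\), and \(Q\) is the matrix from the answer to Question a.

```python
import sympy as sp
from sympy import Matrix, sqrt

x, y, z = sp.symbols("x y z", real=True)
u, v, w = sp.symbols("u v w", real=True)   # hypothetical names for the beta-coordinates

q = -2*x**2 - 2*y**2 - 2*z**2 + 2*x*y + 2*x*z - 2*y*z + 2*x + y + z + 5

# Q from the answer to Question a; its columns form the basis beta.
Q = Matrix([
    [-sqrt(3)/3, sqrt(2)/2,  sqrt(6)/6],
    [ sqrt(3)/3, 0,          sqrt(6)/3],
    [ sqrt(3)/3, sqrt(2)/2, -sqrt(6)/6],
])

# Standard coordinates expressed through beta-coordinates.
new = Q * Matrix([u, v, w])
q_beta = sp.expand(q.subs({x: new[0], y: new[1], z: new[2]}, simultaneous=True))

print(q_beta)   # contains no mixed terms u*v, u*w or v*w
```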

7: The Partial Derivative Increases the most in the Gradient Direction#

This exercise is taken from the textbook, and the goal is to argue why in the gradient method, one moves in the direction of the gradient vector.

Let \(f: \mathbb{R}^{n} \to \mathbb{R}\) be a function for which all directional derivatives exist at \(\pmb{x} \in \mathbb{R}^{n}\). Assume that \(\nabla f(\pmb{x})\) is not the zero vector.

Question a#

Show that \(\pmb{u} := \nabla f(\pmb{x}) / \Vert \nabla f(\pmb{x}) \Vert\) is a unit vector.

Question b#

Show that, among all unit vectors \(\pmb{v}\), the scalar \(|\nabla_{\pmb{v}}f(\pmb{x})|\) attains its largest possible value when \(\pmb{v} = \pm \pmb{u}\).

Hint

Remember that \(\nabla_{\pmb{v}}f(\pmb{x}) = \langle \pmb{v}, \nabla f (\pmb{x}) \rangle\). What do you get if you substitute in \(\pmb{u} = \nabla f(\pmb{x}) / \Vert \nabla f(\pmb{x}) \Vert\)?

Hint

Use the Cauchy-Schwarz inequality to argue that \(|\nabla_{\pmb{v}}f(\pmb{x})|\) cannot become larger than this.

Answer

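From the Cauchy-Schwarz inequality we see, firstly, that \(| \nabla_{\pmb{v}}f(\pmb{x}) |= | \langle \pmb{v}, \nabla f (\pmb{x}) \rangle | \le \Vert \pmb{v} \Vert \, \Vert \nabla f (\pmb{x})\Vert = \Vert \nabla f (\pmb{x})\Vert\) for all unit vectors \(\pmb{v}\). With \(\pmb{v} = \pmb{u} = \nabla f(\pmb{x}) / \Vert \nabla f(\pmb{x}) \Vert\) we have

\(| \nabla_{\pmb{u}}f(\pmb{x}) | = \frac{| \langle \nabla f (\pmb{x}), \nabla f (\pmb{x}) \rangle |}{\Vert \nabla f (\pmb{x})\Vert} = \frac{\Vert \nabla f (\pmb{x})\Vert^2}{\Vert \nabla f (\pmb{x})\Vert} = \Vert \nabla f (\pmb{x})\Vert,\)

which equals its maximal value.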
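The bound can be illustrated numerically. The sketch below uses a hypothetical example function \(f(x, y) = x^2 + 3xy\) and the sample point \((1, 2)\), neither of which comes from the exercise, and samples unit vectors on the unit circle.

```python
import numpy as np

def grad_f(p):
    """Gradient of the hypothetical example f(x, y) = x**2 + 3*x*y."""
    x, y = p
    return np.array([2*x + 3*y, 3*x])

g = grad_f(np.array([1.0, 2.0]))   # gradient at the sample point (1, 2)
u = g / np.linalg.norm(g)          # unit vector in the gradient direction

# Sample unit vectors v on the unit circle and evaluate <v, grad f>.
angles = np.linspace(0.0, 2*np.pi, 721)
V = np.column_stack([np.cos(angles), np.sin(angles)])
directional = V @ g

print(np.linalg.norm(u))           # 1 up to rounding
print(np.abs(directional).max(), np.linalg.norm(g), abs(u @ g))
```

No sampled direction exceeds \(\Vert \nabla f(\pmb{x}) \Vert\), and the direction \(\pmb{u}\) attains it, in line with the Cauchy-Schwarz argument.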

8: Standard Equations for the Three Typical Conic Sections#

In the following examples, we consider quadratic forms without mixed terms (since we can eliminate these through diagonalization, as in Exercise 6). Here, it is possible to take it a step further and remove the first-degree terms as well. This technique is called completing the square. In the following, we will use this technique in order to identify so-called conic sections.

Question a#

An ellipse in the \((x, y)\) plane with center \((c_1, c_2)\), semi-axes \(a\) and \(b\), and symmetry axes \(x = c_1\) and \(y = c_2\) has the standard equation

\(\frac{(x-c_1)^2}{a^2}+\frac{(y-c_2)^2}{b^2}=1.\)

An ellipse is given by the equation

Complete the square, put the equation in standard form, and specify the ellipse’s center, semi-axes and symmetry axes.

Hint

Plot the given equation with the command dtuplot.plot_implicit (from the dtumathtools package) and check your results.

Answer

Center at \((-1,3).\) Semi-axes are \(a=1,b=2\). Symmetry axes are \(x=-1,y=3.\)
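Completing the square can also be automated. The sketch below uses a hypothetical helper `complete_square` and an assumed example equation \(4x^2 + y^2 + 8x - 6y + 9 = 0\), chosen only for illustration; it is not necessarily the equation given in the exercise.

```python
import sympy as sp

x, y = sp.symbols("x y", real=True)

def complete_square(expr, var):
    """Hypothetical helper: return (a, c, rest) with expr == a*(var - c)**2 + rest."""
    a, b, const = sp.Poly(expr, var).all_coeffs()   # expr must have degree 2 in var
    c = -b / (2*a)
    rest = sp.expand(const - b**2 / (4*a))
    return a, c, rest

# Assumed example equation lhs = 0 (an illustration, not necessarily the exercise's).
lhs = 4*x**2 + y**2 + 8*x - 6*y + 9

ax, cx, rest_x = complete_square(lhs, x)     # lhs = ax*(x - cx)**2 + rest_x
ay, cy, const = complete_square(rest_x, y)   # rest_x = ay*(y - cy)**2 + const

# ax*(x - cx)**2 + ay*(y - cy)**2 = -const; dividing by -const gives the standard form.
semi_a = sp.sqrt(-const / ax)
semi_b = sp.sqrt(-const / ay)
print((cx, cy), semi_a, semi_b)
```

The same helper applies to the hyperbola and parabola cases, where the signs of the coefficients (or a missing squared term) determine the type of conic section.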

Question b#

A hyperbola in the \((x,y)\) plane with center \((c_1,c_2),\) semi-axes \(a\) and \(b\), and symmetry axes \(x=c_1\) and \(y=c_2\) has the standard equation

\(\frac{(x-c_1)^2}{a^2}-\frac{(y-c_2)^2}{b^2}=1.\)

Alternatively (if it isn’t horizontally but vertically oriented):

A hyperbola is given by the equation

Complete the square, put the equation in standard form, and specify the hyperbola’s center, semi-axes and symmetry axes.

Hint

Plot the given equation with the command dtuplot.plot_implicit (from the dtumathtools package) and check your results.

Answer

Center at \((2,-2).\) Semi-axes are \(a=2,b=2\). Symmetry axes are \(x=2,y=-2.\)

Question c#

A parabola in the \((x, y)\) plane with its vertex (stationary point) at \((c_1, c_2)\) and symmetry axis \(x = c_1\) has the standard equation

Alternatively, if the parabola is not vertically but horizontally oriented, in which case the symmetry axis becomes \(y=c_2\):

A parabola is given by the equation

Complete the square, put the equation in standard form, and specify the parabola’s vertex and symmetry axis.

Hint

Plot the given equation with the command dtuplot.plot_implicit (from the dtumathtools package) and check your results.

Answer

Vertex at \((-3,-1).\) Symmetry axis is: \(x=-3.\)

Theme Exercise – Short Day#

This week the Short Day will be dedicated to Theme 2: Data Matrices and Dimensional Reduction.